Many of us need up-to-date software and hardware in order to work efficiently, so we have to keep pace with technological development. But what should we do with the outdated hardware? It feels wasteful to send it to its grave or to just leave it in a corner. A more productive solution is to use the old hardware to build a Beowulf cluster and use it to speed up computations.

About Beowulf Clusters

In 1994, a group of researchers at NASA built a small cluster consisting of ordinary workstations. They called this cluster, or parallel workstation, Beowulf. Since then, the term Beowulf cluster has been used to describe clusters that are built from commodity hardware (for example, ordinary workstations) running open source software. The definition is quite loose regarding the computer hardware and network interconnects. The most important point is that the workstations are no longer used as workstations, but instead serve as nodes in a High Performance Computing (HPC) cluster.

Beowulf clusters can be used to compute all kinds of problems, but, as we mentioned earlier in the Hybrid Modeling series, the problem has to be parallelizable in order to take advantage of the added computing power in the cluster. Typical applications include particle simulations, genetics problems, and, probably most interesting for us COMSOL Multiphysics users, parametric sweeps and large matrix multiplications.

But why would we want to build a cluster from non-HPC hardware? One reason might be “because we already have the hardware”. For example, after an office-wide workstation or laptop upgrade, we might not know what to do with the old, outdated computers, but we still don’t want to throw them away. Another option is to harness the combined computational power of workstations that sit idle after office hours or on weekends.

What Do We Need to Set Up the Cluster?

First of all, we need the hardware. For this blog post, we used our old, faithful laptops as nodes, but we could just as well have used workstations or old servers. Either way, when setting up a Beowulf cluster, we should try to choose nodes with similar hardware. Our laptops are no longer “performance monsters”; each one is equipped with an Intel® T2400 processor at 1.83 GHz and 2 GB of RAM. They all have Ethernet network cards, so we used these to connect them, which also requires a switch. In our case, we used an old HP® 1800 switch, but, here too, ordinary commodity hardware (such as a five-port home office switch) will do, depending on how many nodes we are going to use.

Our Beowulf cluster, built from six old laptops and an old switch.

Since a Beowulf cluster (according to the above definition) needs an open source operating system, we installed a Linux® distribution on the laptops. Although there are operating systems designed specifically for Beowulf cluster computing, a standard server operating system (e.g., Debian®) works as well.

Once the hardware, network, operating system, and a shared file system are set up, the only step left is to install the software: COMSOL Multiphysics®. No separate installation of a Message Passing Interface (MPI) library or scheduler is necessary, since the COMSOL software includes everything it needs to compute on a cluster.

Setting Up the Beowulf Cluster and Installing COMSOL Multiphysics

For our set-up, we chose the Debian® 6 stable release, which was one of the distributions supported by COMSOL Multiphysics at the time of writing. Next, we set up the systems. To keep the installation as slim as possible, we installed only the base system plus an SSH server, which gives us access to the cluster over the network. We did not need a desktop environment; it would only have taken resources away from our Beowulf system.

After installing the operating system, we needed to set up the network and, of course, the shared file system for the compute nodes. For the shared file system, we installed an NFS server on the first node, which acts as the head node, and exported the shared file system locations from there.

Here is one example of a set-up:

/srv/data/comsolapp: for the COMSOL application

/srv/data/comsoljobs: for storing the users’ COMSOL cluster jobs
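As a minimal sketch of the export step, assuming the compute nodes sit on a private subnet (the 192.168.1.0/24 in the usage comment is only a placeholder), the helper below prints /etc/exports entries for the two shares. The function name make_exports is hypothetical, and exporting the application share read-only is our assumption: only the head node, where the share is local, needs to write to it during installation or upgrades.

```shell
#!/bin/sh
# Hypothetical helper: print /etc/exports entries for the two shares.
# Assumption: the application share is exported read-only (only the head
# node writes to it, locally) and the jobs share read-write for the users.
make_exports() {
    subnet="$1"   # client network, e.g. 192.168.1.0/24 (placeholder)
    printf '/srv/data/comsolapp  %s(ro,sync,no_subtree_check)\n' "$subnet"
    printf '/srv/data/comsoljobs %s(rw,sync,no_subtree_check)\n' "$subnet"
}

# Usage on the head node, as root (Debian package: nfs-kernel-server):
#   apt-get install nfs-kernel-server
#   mkdir -p /srv/data/comsolapp /srv/data/comsoljobs
#   make_exports 192.168.1.0/24 >> /etc/exports
#   exportfs -ra    # re-read /etc/exports and apply the exports
```

If your installation turns out to need write access to the application directory from the compute nodes, change ro to rw for that share.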

On the compute nodes, we mounted these shares automatically.
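One common way to mount the shares automatically is an NFS entry per share in /etc/fstab on each compute node. The sketch below assumes the head node is reachable as cn01 (the first host in the mpd.hosts file used later); make_fstab is a hypothetical helper. It mounts each share at the same path as on the head node, so the COMSOL installation and the job files have identical paths on every node. An automounter such as autofs would be an alternative.

```shell
#!/bin/sh
# Hypothetical helper: print /etc/fstab lines that mount both shares from
# the head node at the same paths on a compute node.
# Assumption: the head node's hostname is cn01 unless another is given.
make_fstab() {
    server="${1:-cn01}"
    for share in /srv/data/comsolapp /srv/data/comsoljobs; do
        # <remote> <mount point> <type> <options> <dump> <fsck pass>
        printf '%s:%s %s nfs defaults 0 0\n' "$server" "$share" "$share"
    done
}

# Usage on each compute node, as root (Debian package: nfs-common):
#   apt-get install nfs-common
#   mkdir -p /srv/data/comsolapp /srv/data/comsoljobs
#   make_fstab cn01 >> /etc/fstab
#   mount -a    # mount everything listed in /etc/fstab
```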

Since we have no desktop environment installed on our systems, we need to use the automated installer (see page 77 of the COMSOL Multiphysics Installation Guide). For this, we took the “setupconfig.ini” file from the installation media and edited it to suit our needs.

The most important change is to set the “showgui” option to “0” instead of “1”. Another important setting is the destination path. Here, we chose the network share, because a single installation there is much easier to maintain and upgrade to new versions of COMSOL Multiphysics.
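Editing the file by hand works fine, but the showgui change can also be scripted. The sketch below assumes the key appears at the start of a line as showgui = 1 (the spacing around = may vary); the function name disable_gui and the copy destination in the usage comment are hypothetical. The destination path is deliberately left out, since its key name should be taken from the comments in your own copy of setupconfig.ini.

```shell
#!/bin/sh
# Hypothetical helper: switch a copy of setupconfig.ini to non-interactive
# mode by turning "showgui = 1" into "showgui = 0".
disable_gui() {
    cfg="$1"
    # \1 keeps the "showgui =" prefix; the 0 right after it is the new value.
    sed 's/^\(showgui[[:space:]]*=[[:space:]]*\)1[[:space:]]*$/\10/' "$cfg" > "$cfg.tmp" &&
        mv "$cfg.tmp" "$cfg"
}

# Usage (paths are placeholders; adjust to your media and share):
#   cp /media/cdrom/setupconfig.ini /srv/data/comsoljobs/setupconfig.ini
#   disable_gui /srv/data/comsoljobs/setupconfig.ini
```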

To start the installation, just add the parameter “-s /path/to/the/setupconfig.ini”, for example:

cd /media/cdrom/
./setup -s /path/to/the/setupconfig.ini

Now, the text-based installer starts and the output is sent to the terminal.

To tell COMSOL Multiphysics which compute nodes it can use, we write a simple “mpd.hosts” file containing the list of hostnames:

mpd.hosts:

cn01
cn02
...
cn06
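With more nodes, writing the host file by hand gets tedious. This minimal sketch, assuming the cnNN naming scheme from the list above, generates the file with one hostname per line; the helper name write_hosts is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write a host file with one hostname per line,
# cn01, cn02, ..., cnNN (the naming follows the example above).
write_hosts() {
    file="$1"
    nodes="${2:-6}"          # default: six nodes, as in our laptop cluster
    : > "$file"              # create or truncate the file
    i=1
    while [ "$i" -le "$nodes" ]; do
        printf 'cn%02d\n' "$i" >> "$file"   # zero-padded: cn01, cn02, ...
        i=$((i + 1))
    done
}

write_hosts mpd.hosts 6
```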

Finally, we start the COMSOL Multiphysics server on the first node, using all six nodes:

//comsol server -f mpd.hosts -nn 6 -multi on
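To keep the -nn value in step with the host file, one option is to count the listed hosts instead of typing the number. This sketch assumes the simple case used here, one COMSOL process per node; count_hosts is a hypothetical helper, and the usage comment assumes the comsol launcher from the shared installation is on your PATH.

```shell
#!/bin/sh
# Hypothetical helper: count the non-blank lines (hostnames) in a host file.
count_hosts() {
    grep -c '[^[:space:]]' "$1"
}

# Usage on the head node, assuming comsol is on the PATH:
#   NN=$(count_hosts mpd.hosts)
#   comsol server -f mpd.hosts -nn "$NN" -multi on
```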

Now you can start COMSOL Multiphysics on your desktop and connect to the server.

The Results Are in: Old Hardware, Increased Productivity

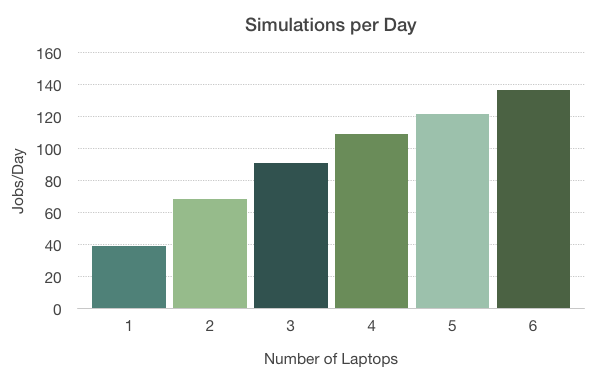

To test our “brand new” cluster, we chose a modified version of the Tuning Fork model, available in the Model Gallery, and increased the number of parameter values in the parametric sweep to 48. We then computed the model with the COMSOL Multiphysics batch command, using one to six laptops. The graph below shows the measured total number of simulations per day.

Productivity (jobs per day) for different numbers of laptops, based on the total time from opening the file to saving the result.
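The jobs-per-day metric is the number of seconds in a day divided by the wall-clock time of one complete run; for example, 40 jobs per day corresponds to 2160 seconds, or 36 minutes, per run. Below is a sketch of how such a measurement could be scripted. The helper names, the file names, and the exact placement of the -nn and -f options in the batch command are assumptions to check against the COMSOL documentation for your version; timing uses GNU date +%s.

```shell
#!/bin/sh
# Convert the wall-clock seconds of one complete run (open, solve, save)
# into jobs per day: 86400 seconds per day divided by seconds per job.
jobs_per_day() {
    awk -v s="$1" 'BEGIN { printf "%.1f\n", 86400 / s }'
}

# Hypothetical helper: run a command, time it with date +%s,
# and print the resulting jobs per day.
time_job() {
    start=$(date +%s)
    "$@" || return 1
    elapsed=$(( $(date +%s) - start ))
    [ "$elapsed" -gt 0 ] || elapsed=1   # avoid dividing by zero on very short runs
    jobs_per_day "$elapsed"
}

# Usage sketch (placeholders; verify the option syntax for your version):
#   for n in 1 2 3 4 5 6; do
#       time_job comsol batch -nn "$n" -f mpd.hosts \
#           -inputfile tuning_fork.mph -outputfile tuning_fork_out.mph
#   done
```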

As we can see, with six laptops we reach almost 140 jobs per day, compared with just under 40 jobs per day on a single laptop. All in all, this gives us a speedup of almost 3.5, which is impressive considering that we are using old laptops.
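Because jobs per day is the reciprocal of the time per job, the speedup on N laptops is simply the ratio of the two throughputs read from the graph. We also add the parallel efficiency (speedup divided by the number of nodes), a standard figure of merit that is not part of the measurements above but follows directly from them. Both values are approximate, since the throughputs are read off the graph.

$$
S_6 = \frac{\text{jobs/day on 6 laptops}}{\text{jobs/day on 1 laptop}} \approx \frac{140}{40} = 3.5,
\qquad
E_6 = \frac{S_6}{6} \approx \frac{3.5}{6} \approx 0.58
$$

Roughly speaking, each laptop in the six-node cluster delivers a bit more than half of the throughput it achieves on its own.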

We should note, though, that the measured time is not the solution time, but the total time for running the simulations, which includes opening, computing, and saving the model. Opening and saving are serial in nature, so, by Amdahl’s law (mentioned in our earlier blog post about batch sweeps), they limit the overall speedup, and we do not see the true speedup of the solver. If we connected to our Beowulf cluster with the COMSOL Client/Server functionality and compared only the computation times, we would expect an even larger productivity increase than the numbers above show.
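To get a feel for how much the serial parts matter, we can fit Amdahl’s law to the measured speedup. This is a rough estimate under a simplified model: it lumps everything that does not scale (opening and saving the file, communication, load imbalance) into one serial fraction 1 - p of the single-laptop run time, and assumes the remaining fraction p scales perfectly over N nodes.

$$
S(N) = \frac{1}{(1 - p) + p/N}
\;\Longrightarrow\;
p = \frac{1 - 1/S(N)}{1 - 1/N}
= \frac{1 - 1/3.5}{1 - 1/6}
= \frac{5/7}{5/6}
= \frac{6}{7} \approx 0.86
$$

$$
\lim_{N \to \infty} S(N) = \frac{1}{1 - p} = 7
$$

Under this model, about one seventh of the single-laptop run time behaves serially, and no number of nodes could push the speedup of this particular workflow beyond about 7. Measuring only the solver time removes part of that serial fraction, which is why a larger speedup would be expected there.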

In conclusion, we can indeed use old hardware together with COMSOL Multiphysics to increase our productivity and speed up computations, especially parametric ones.

Debian is a registered trademark of Software in the Public Interest, Inc. in the United States.

HP is a registered trademark of Hewlett-Packard Development Company, L.P.

Intel is a trademark of Intel Corporation in the U.S. and/or other countries.

Linux is a registered trademark of Linus Torvalds.

Comments (3)

James Freels

October 24, 2015

I have had this on my list to try since this blog entry was written. I wish I had tried it much earlier! I had the NFS mounts set up in less than an hour for 3 of my Linux workstations. I was able to rsync my existing COMSOL installation to the root NFS file system (that actually took the longest time). At that point, I was able to get the server cluster to work, and then I ran a test problem. This works beautifully!! So, if you have some available Linux workstations with compatible resources (similar memory, processors, etc.), it makes sense to do this. Another benefit is that the NFS server lets you keep a single COMSOL installation instead of several.

One question: Is it possible to specify per-compute-node settings to tune a setup and spread the load evenly? For example, to specify memory, number of processors, etc. on each compute node?

Pär Persson Mattsson

October 27, 2015

Hi James,

Thank you for commenting on my blog post. I’m glad to hear it worked well for you!

It is possible to use the file containing the host names (called mpd.hosts in this post) to specify a different number of processes per compute node. This makes it possible to spread out the MPI processes manually over the compute nodes.

It might also be possible to use an external tool, such as a scheduler, to get access to more specialized settings for job distribution. In this case, the available settings depend on the tool you choose.

If you have any specific questions on how to combine this with COMSOL, feel free to contact us through the COMSOL support, and we can discuss your ideas in more detail.

Thank you for reading!

Pär

Tanai Marin-Alvarado

May 25, 2019

Thanks for posting this! It’s very interesting.

In your example, you use mpd.hosts to define the list of compute nodes. Does that mean you had already installed MPICH on your Linux machines, or is that a COMSOL setting?

Nowadays, MPICH doesn’t support MPD and instead uses Hydra as the default process manager. Is it possible for COMSOL to use Hydra instead of MPD?